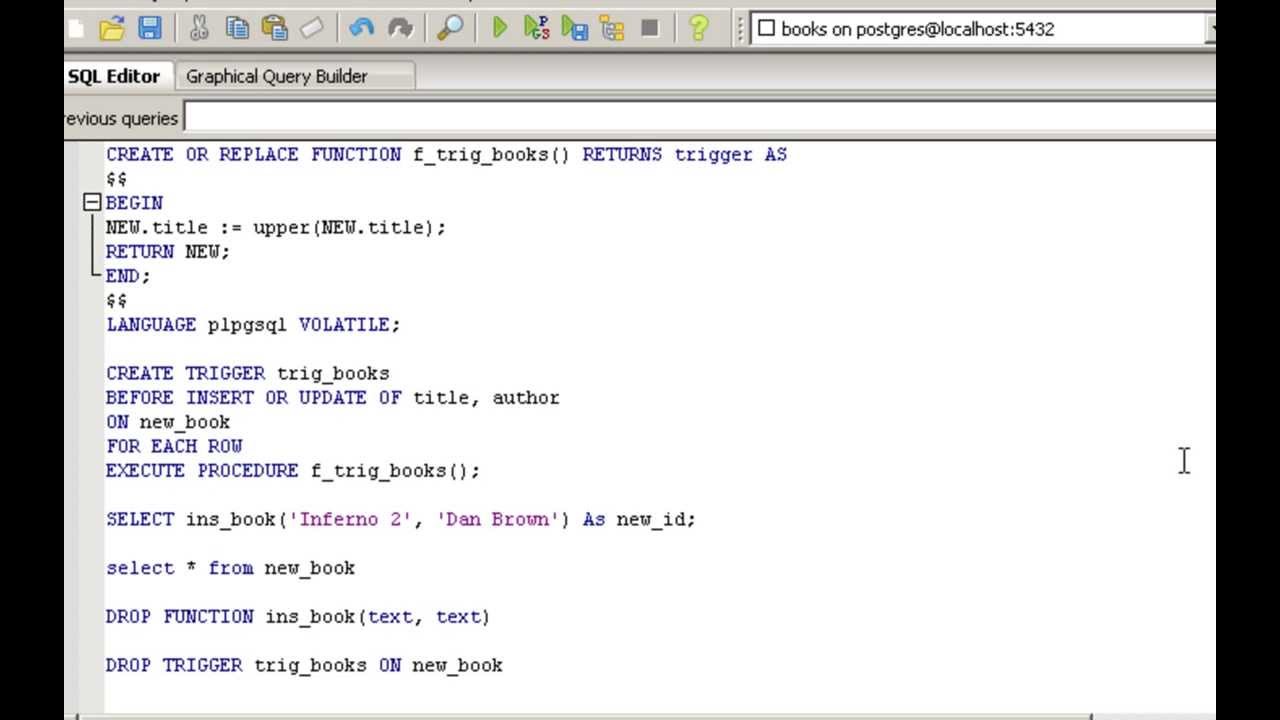

# Postgres copy data from one table to another

On my Postgres server I have a few tables that are constantly accessed by business intelligence applications, so ideally they should stay available most of the time.

The tables get flushed and reloaded by our ETL pipeline on a nightly basis (I know... due to some legacy setup I can't use incremental updates here). The loading process takes pretty long and is not bullet-proof stable yet. To increase the availability of the tables, I decided to load the downstream data from our ETL pipeline into staging tables first and, if they get loaded successfully, copy the data over to the actual production tables.

Here is a copy function I created for this purpose:

```sql
CREATE OR REPLACE FUNCTION guarded_copy(src text, dest text) RETURNS void AS $$
DECLARE
  c1 integer;
  c2 integer;
BEGIN
  EXECUTE FORMAT('SELECT COUNT(*) FROM %I', src) INTO c1;
  EXECUTE FORMAT('SELECT COUNT(*) FROM %I', dest) INTO c2;
  -- only replace the production data if staging has more rows
  IF c1 > c2 THEN
    EXECUTE FORMAT('TRUNCATE TABLE %I CASCADE', dest);
    EXECUTE FORMAT('INSERT INTO %I SELECT * FROM %I', dest, src);
  END IF;
END
$$ LANGUAGE plpgsql;
```

The idea is to truncate the `dest` table and load it with data from the `src` table if `src` (the staging table) has more rows than `dest` (the actual production table). Note that the production table (`dest`) has constraints and indexes, while the staging table (`src`) is configured with NO indexes or constraints to speed up loading from ETL.

The issue with my function above is that the data copy can be very expensive because of the indexes and constraints on the `dest` table. What's a better way to achieve the same goal?

I am thinking of dropping/disabling the indexes on `dest` before the data copy step and adding them back right after. I am also thinking about swapping the 2 tables by renaming them, but this requires the indexes on one table to be copied to the other. On table `dest` I have a (unique) primary key on column `id`, and indexes on timestamp columns.

This question and this question really helped. For the table-swapping option, I think the code below is close to what I want:

```sql
CREATE OR REPLACE FUNCTION guarded_swap(src text, dest text) RETURNS void AS $$
DECLARE
  c1 integer;
  c2 integer;
  r  record;
BEGIN
  -- same row-count guard as guarded_copy
  EXECUTE FORMAT('SELECT COUNT(*) FROM %I', src) INTO c1;
  EXECUTE FORMAT('SELECT COUNT(*) FROM %I', dest) INTO c2;
  IF c1 <= c2 THEN
    RETURN;
  END IF;

  -- move the primary key from dest to src (drop first: its index name must be unique)
  FOR r IN SELECT conname, pg_get_constraintdef(oid) AS def
           FROM pg_constraint
           WHERE contype = 'p' AND conrelid = dest::regclass
  LOOP
    EXECUTE FORMAT('ALTER TABLE %I DROP CONSTRAINT IF EXISTS %I', dest, r.conname);
    EXECUTE FORMAT('ALTER TABLE %I ADD CONSTRAINT %I %s', src, r.conname, r.def);
  END LOOP;

  -- move the other indexes; REPLACE rewrites every occurrence of the dest name
  FOR r IN SELECT i.relname, REPLACE(pg_get_indexdef(ix.indexrelid), dest, src) AS def
           FROM pg_index ix
           JOIN pg_class i ON i.oid = ix.indexrelid
           WHERE ix.indrelid = dest::regclass AND NOT ix.indisprimary
  LOOP
    EXECUTE FORMAT('DROP INDEX %I', r.relname);
    EXECUTE r.def;
  END LOOP;

  -- swap the names; the old production table becomes the next staging table
  EXECUTE FORMAT('ALTER TABLE %I RENAME TO %I', dest, CONCAT(dest, '_old'));
  EXECUTE FORMAT('ALTER TABLE %I RENAME TO %I', src, dest);
  EXECUTE FORMAT('ALTER TABLE %I RENAME TO %I', CONCAT(dest, '_old'), src);
END
$$ LANGUAGE plpgsql;
```

Another thought: PKs and FKs might be unnecessary on tables that are only used for analytics purposes.

A related case is copying a table between two databases. I've used `pg_dump -s` to replicate the schema of the first database in the second, and as of now I have 2 cursors, one for each database. I'm trying to do something like:

```python
cursor1.execute('SELECT * FROM table1')
result = cursor1.fetchall()
# one %s placeholder per column of table2 (a two-column table is assumed here)
cursor2.executemany('INSERT INTO table2 VALUES (%s, %s)', result)
cursor2.connection.commit()
```
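The idea of dropping the indexes on `dest` before the copy and adding them back afterwards can also be driven from the client. What follows is a minimal sketch, assuming a DB-API 2.0 connection such as psycopg2's; `guarded_reload`, `quote_ident` and the `index_ddl` mapping (index name to its `CREATE INDEX` statement, which in Postgres could be collected with `pg_get_indexdef`) are hypothetical names. Only secondary indexes should go into `index_ddl`; the primary key stays in place.

```python
from typing import Mapping


def quote_ident(name: str) -> str:
    """Hypothetical helper: double-quote an identifier (valid in PostgreSQL and SQLite)."""
    return '"' + name.replace('"', '""') + '"'


def guarded_reload(conn, src: str, dest: str, index_ddl: Mapping[str, str]) -> bool:
    """Replace the rows of dest with those of src if src has more rows.

    index_ddl maps each secondary index name on dest to its CREATE INDEX
    statement (assumed to be collected beforehand). With psycopg2 all
    statements below run in one transaction until commit/rollback.
    Returns True if dest was reloaded.
    """
    s, d = quote_ident(src), quote_ident(dest)
    cur = conn.cursor()
    try:
        cur.execute(f"SELECT COUNT(*) FROM {s}")
        (n_src,) = cur.fetchone()
        cur.execute(f"SELECT COUNT(*) FROM {d}")
        (n_dest,) = cur.fetchone()
        if n_src <= n_dest:
            conn.rollback()
            return False
        # Drop secondary indexes so the bulk insert does not maintain them row by row
        for name in index_ddl:
            cur.execute(f"DROP INDEX {quote_ident(name)}")
        # DELETE keeps the sketch portable; in Postgres, TRUNCATE is much cheaper
        cur.execute(f"DELETE FROM {d}")
        cur.execute(f"INSERT INTO {d} SELECT * FROM {s}")
        # Rebuild each index once, over the fully loaded table
        for ddl in index_ddl.values():
            cur.execute(ddl)
        conn.commit()
        return True
    except Exception:
        conn.rollback()
        raise
    finally:
        cur.close()
```

Because Postgres DDL is transactional, readers see either the old or the new contents of `dest`. The trade-off is locking: `DROP INDEX` takes an `ACCESS EXCLUSIVE` lock on the table, so BI queries wait until the commit, which is what the rename-based swap tries to keep short.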
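For copying a table between two databases with one cursor per database, `fetchall()` holds the whole table in client memory at once. A sketch that copies in batches with the DB-API `fetchmany`/`executemany` calls is shown below, assuming two DB-API 2.0 cursors, trusted table names, and a target table that already exists with the same column order (for example created from the `pg_dump -s` schema). `copy_rows` and its parameters are hypothetical; committing is left to the caller.

```python
def copy_rows(src_cur, dest_cur, src_table: str, dest_table: str,
              placeholder: str = "%s", batch_size: int = 10_000) -> int:
    """Copy every row of src_table into dest_table in batches; return the row count.

    placeholder is the driver's parameter marker: "%s" for psycopg2, "?" for sqlite3.
    Table names are interpolated directly, so they must be trusted identifiers.
    """
    src_cur.execute(f"SELECT * FROM {src_table}")
    insert = None
    copied = 0
    while True:
        rows = src_cur.fetchmany(batch_size)
        if not rows:
            break
        if insert is None:
            # Build "INSERT ... VALUES (ph, ph, ...)" from the width of the first row
            marks = ", ".join([placeholder] * len(rows[0]))
            insert = f"INSERT INTO {dest_table} VALUES ({marks})"
        dest_cur.executemany(insert, rows)
        copied += len(rows)
    return copied
```

With psycopg2, a regular cursor still transfers the full result set on `execute`; passing a server-side cursor (created with `connection.cursor(name=...)`) as `src_cur` streams the rows instead, and `psycopg2.extras.execute_values` is usually faster than `executemany` for the inserts.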
